Natural Language Processing

This lesson will introduce you to human-computer interaction with natural language processing.

What is NLP?

Natural Language Processing (or NLP) is a field of artificial intelligence in which computers analyze, understand, and derive meaning from human language in a smart and useful way.

NLP can be utilized for understanding what people are writing about, translating text from one language to another, recognizing spoken words and turning them into text, and much more.

Now let's take a look at some of the most common NLP concepts.

Tokenization

Tokenization is the process of dividing text into smaller units, such as words or sentences. It is usually the first task performed in natural language processing.

For example, take the sentence "My name is David." Does this sentence contain a name? Does it contain a country? You might have a separate list of names that you can check each word against to see if it is a name. How many nouns does the sentence have? Does it have any verbs? If you want to understand a sentence, you need to break it down into its words.

Tokenization can be performed at two levels: word-level and sentence-level.

Word-level Tokenization

Word-level tokenization returns a set of words from a sentence.

E.g. tokenizing the sentence "My name is David" returns the following set of words:

words = ['My', 'name', 'is', 'David']

From this breakdown, it would be easy for the computer to look through the list to find "David" and know that the sentence contains a name.
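
A minimal sketch of word-level tokenization and the name lookup described above, using only Python's standard library. The function name tokenize_words and the KNOWN_NAMES list are assumptions made for this illustration; real tokenizers handle many more cases (hyphens, contractions, other scripts).

```python
import re

def tokenize_words(sentence):
    """Split a sentence into word tokens, dropping punctuation."""
    # Keep runs of letters, digits, and apostrophes; anything else separates tokens.
    return re.findall(r"[A-Za-z0-9']+", sentence)

# Hypothetical list of known names, used only for this illustration.
KNOWN_NAMES = {"David", "Maria", "Chen"}

words = tokenize_words("My name is David.")
names_found = [word for word in words if word in KNOWN_NAMES]

print(words)        # ['My', 'name', 'is', 'David']
print(names_found)  # ['David']
```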

At the sentence level, tokenization returns a set of sentences from a document.

E.g. tokenizing the document with the text "My name is David. I am 21 years old. I live in the UK." returns the following set of sentences:

S1 = My name is David.

S2 = I am 21 years old.

S3 = I live in the UK.

By breaking down this text into sentences, you can make decisions about each sentence individually. For example, sentence 1 is 4 words long, while sentences 2 and 3 are both 5 words long. Breaking down text into sentences also allows you to break down each sentence into its words. By breaking down this text into sentences and each sentence into words, you can tell that sentence 1 contains a name and sentence 3 contains a country.
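
Sentence-level tokenization can be sketched in a similar way. The example below is a simplification under a stated assumption: a sentence ends at ".", "!" or "?" followed by whitespace. That rule breaks on abbreviations such as "Dr. Smith", which is one reason real sentence tokenizers are more involved. The function names are illustrative.

```python
import re

def tokenize_sentences(text):
    """Split text into sentences at '.', '!' or '?' followed by whitespace."""
    # The lookbehind keeps the punctuation mark attached to its sentence.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def tokenize_words(sentence):
    """Split a sentence into word tokens, dropping punctuation."""
    return re.findall(r"[A-Za-z0-9']+", sentence)

document = "My name is David. I am 21 years old. I live in the UK."
sentences = tokenize_sentences(document)
word_counts = [len(tokenize_words(s)) for s in sentences]

print(sentences)    # ['My name is David.', 'I am 21 years old.', 'I live in the UK.']
print(word_counts)  # [4, 5, 5]
```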

Stop Word Removal

Stop words are words that do not provide any useful information for your data analysis.

E.g. if you are developing emotion detection software, words such as "is", "am", and "the" do not give information related to emotions.

If you have a sentence that says "I am feeling happy today", "I" and "am" do not give emotion-related information. However, "I" may be important in identifying who is the subject of the sentence.

There is no universal list of stop words to remove; it depends on your application.

In NLP, every word needs processing. Removing stop words saves processing time when you have a lot of words to process. It also prevents you from incorrectly training your machine learning algorithm.

For example, when you are writing the emotion detection software referenced above, and try to train a machine learning algorithm on a set of text, it might mistakenly identify sentences that use the word "the" as having a particular emotion, even though you know it doesn't impact the sentence.

In addition, it will take up the machine learning algorithm's processing power to try to interpret which emotion "the" is supposed to represent. Before you start performing long-running programs on text data, it is usually a good idea to find the top 50 or 100 words in the document, and see if any of them would be good to remove from your analysis and algorithm training.
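
The two ideas in this section, filtering out stop words and inspecting the most frequent words first, can be sketched as follows. The STOP_WORDS set is a hypothetical, deliberately tiny list chosen for the emotion detection example; as noted above, the right list depends on the application.

```python
import re
from collections import Counter

# Hypothetical stop word list for this illustration only.
STOP_WORDS = {"i", "am", "is", "the", "a", "an"}

def tokenize_words(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def remove_stop_words(words, stop_words=STOP_WORDS):
    """Keep only the words that are not in the stop word list."""
    return [word for word in words if word not in stop_words]

def most_common_words(text, n=50):
    """Return the n most frequent words with their counts, as stop word candidates."""
    return Counter(tokenize_words(text)).most_common(n)

filtered = remove_stop_words(tokenize_words("I am feeling happy today"))
print(filtered)  # ['feeling', 'happy', 'today']

# The most frequent word in a text is a typical candidate for removal.
print(most_common_words("the cat saw the dog and the bird", n=1))  # [('the', 3)]
```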

Stemming

Stemming is the process of removing suffixes from words in order to reduce them to a common base form (the stem).

E.g. stemming the words "computational", "computed", and "computing" would all result in "comput", since this is the non-changing part of the word.

Why would you want to do this? Stemming enables you to summarize your data and put words into common groups. This can be helpful for machine learning algorithms. If you wanted a machine learning algorithm to detect whether a person was talking about computers, the algorithm would be heavily reinforced by "comput", since that is a common stem across different texts talking about computing.

If you don't stem the word, the algorithm will believe that "computational", "computed", and "computing" all represent completely different things, even though you as a person know they are very similar.

When thinking about which words to stem, you want to look at a word list that is the complete opposite of your stop word list. Instead of finding the most frequently used words, find words that only appear once or twice inside of your text. If stemming those words won't change how you think about the meaning of the text for your analysis, stemming them will improve the computer's ability to interpret the text.
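
To make the suffix-removal idea concrete, here is a toy stemmer. It is not an established stemming algorithm (production stemmers such as the Porter stemmer apply far more careful rules); the SUFFIXES list and the minimum stem length are assumptions picked so that the example words above reduce to "comput".

```python
# Hypothetical suffix list, ordered longest first so "ational" is tried before "ed".
SUFFIXES = ["ational", "ation", "ing", "ed", "s"]

def naive_stem(word, min_stem_length=3):
    """Strip the first matching suffix, as long as enough of the word remains."""
    word = word.lower()
    for suffix in SUFFIXES:
        stem = word[: -len(suffix)]
        if word.endswith(suffix) and len(stem) >= min_stem_length:
            return stem
    return word  # no suffix matched: return the word unchanged

for word in ["computational", "computed", "computing"]:
    print(word, "->", naive_stem(word))  # each one prints "... -> comput"
```

Because the rules are purely mechanical, a stemmer like this can also produce stems that are not real words, which is usually acceptable when the goal is only to group related words together.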

Lemmatization

Lemmatization is similar to stemming, but it takes the context of a word into account when reducing it to its base form (the lemma).

It is more complex as it needs to look up and fetch the exact word from a dictionary to get its meaning.

E.g. for the word "worse", lemmatization returns "bad", as the context of the word is taken into account. Therefore it knows that "worse" is an adjective and is the comparative form of the word "bad".

However, a stemmer only strips suffixes, so it cannot map "worse" to "bad"; it returns "worse" more or less as it is.
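
The dictionary lookup behind lemmatization can be sketched like this. LEMMA_DICTIONARY is a hypothetical, hand-made stand-in for a real lexical database, and the part of speech is passed in explicitly to play the role of "context".

```python
# Hypothetical mini-dictionary mapping (word, part of speech) to a lemma.
LEMMA_DICTIONARY = {
    ("worse", "adjective"): "bad",
    ("worst", "adjective"): "bad",
    ("better", "adjective"): "good",
    ("ate", "verb"): "eat",
    ("meeting", "verb"): "meet",     # "we are meeting today"
    ("meeting", "noun"): "meeting",  # "the meeting was long"
}

def lemmatize(word, part_of_speech):
    """Look up the lemma for a word in a given role; fall back to the word itself."""
    key = (word.lower(), part_of_speech)
    return LEMMA_DICTIONARY.get(key, word.lower())

print(lemmatize("worse", "adjective"))  # bad
print(lemmatize("meeting", "verb"))     # meet
print(lemmatize("meeting", "noun"))     # meeting
```

The two "meeting" entries show why context matters: the same spelling maps to different lemmas depending on its role in the sentence.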

Both stemming and lemmatization are useful for finding the semantic similarity between different pieces of text.

There are a huge number of words in the English language. If your machine learning algorithm needed to know over 100,000 different words and try to summarize texts, it would be very difficult to process and draw conclusions.

If you instead simplify the English language down into basic words, your program will be able to process information much faster. To see a basic list of words, you can take a look at the Wikipedia Basic Words List.

Parts of Speech

Each word in a sentence has a specific role. E.g. "boy" is a noun and "eat" is a verb. These are the parts of speech (or POS).

An important NLP task is assigning parts of speech tags to the words.

POS tagging helps to construct grammatically correct sentences and identify contexts.

A POS tagger labels words with their corresponding parts of speech.

For instance, "laptop", "mouse", and "keyboard" are tagged as nouns. "eating" and "playing" are verbs while "good" and "bad" are tagged as adjectives.

This is a hard task to perform because many words have multiple meanings and so could be different parts of speech. This depends on context.
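
The sketch below illustrates why context matters for tagging. It is a toy lookup tagger with a single hand-written context rule, not how practical taggers work (those are usually trained on annotated text). The LEXICON contents and the rule are assumptions made for this illustration.

```python
# Hypothetical lexicon: each word lists the tags it can take, most likely first.
LEXICON = {
    "the": ["determiner"],
    "a": ["determiner"],
    "boy": ["noun"],
    "children": ["noun"],
    "good": ["adjective"],
    "watched": ["verb"],
    "play": ["verb", "noun"],  # ambiguous: "children play" vs. "a good play"
    "outside": ["adverb"],
}

def tag(words):
    """Tag each word, using the previous tag to resolve noun/verb ambiguity."""
    tags = []
    for word in words:
        candidates = LEXICON.get(word.lower(), ["unknown"])
        previous = tags[-1] if tags else None
        # Context rule: right after a determiner or adjective, prefer the noun reading.
        if "noun" in candidates and previous in ("determiner", "adjective"):
            tags.append("noun")
        else:
            tags.append(candidates[0])
    return list(zip(words, tags))

print(tag("The children play outside".split()))
print(tag("The boy watched a good play".split()))
```

In the first sentence "play" is tagged as a verb, and in the second it is tagged as a noun, even though the word itself is identical.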

Named Entity Recognition

Named entity recognition refers to the process of finding entities in text and classifying them into predefined categories such as person, location, organization, vehicle, etc.

For instance, take the sentence "Mark Zuckerberg is the CEO of Facebook." A typical named entity recognizer will return the following information about this sentence:

Mark Zuckerberg -> Person

CEO -> Position

Facebook -> Organization

Named entity recognition is important for topic modeling where a program can automatically detect the topic of a document based on the entities within.
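
The output shown for the Mark Zuckerberg sentence can be imitated with a simple lookup table. This is only a sketch under a strong assumption: every entity is already listed in a hand-made dictionary (GAZETTEER, hypothetical). Practical recognizers typically rely on trained models so they can handle names they have never seen, and plain substring matching like this can also match inside longer words.

```python
# Hypothetical gazetteer: known phrases and their entity categories.
GAZETTEER = {
    "Mark Zuckerberg": "Person",
    "CEO": "Position",
    "Facebook": "Organization",
}

def recognize_entities(sentence):
    """Return (phrase, category) pairs for known phrases, in order of appearance."""
    found = []
    for phrase, category in GAZETTEER.items():
        position = sentence.find(phrase)
        if position != -1:
            found.append((position, phrase, category))
    # Sorting by position reports entities in the order they occur in the sentence.
    return [(phrase, category) for _, phrase, category in sorted(found)]

for phrase, category in recognize_entities("Mark Zuckerberg is the CEO of Facebook."):
    print(phrase, "->", category)
```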

NLP Applications

NLP is an exciting area for machine learning because it is still so challenging to write programs that can accurately interpret text. Reading comes naturally to humans after years of practice, but we ask computers to interpret text in a couple of minutes and come back with answers.

Text Classification

If you have an email account, you might occasionally get emails known as spam. These emails may try to sell you something you don't want or promise you money if you submit payment to an address.

Below is an example of part of a spam email, from a Kaggle Spam Email Dataset.

A POWERHOUSE GIFTING PROGRAM You Don't Want To Miss!

GET IN WITH THE FOUNDERS!

The MAJOR PLAYERS are on This ONE

For ONCE be where the PlayerS are

This is YOUR Private Invitation

EXPERTS ARE CALLING THIS THE FASTEST WAY

TO HUGE CASH FLOW EVER CONCEIVED

Leverage $1,000 into $50,000 Over and Over Again

Okay, this message is full of weird capitalized text and claims of letting you make lots of money. Natural language processing can be used to look through hundreds of thousands of emails like this so that they can be categorized as spam before you ever have to read them.

By classifying text with computers, you free up people to read what they want to read, and ignore emails not worth paying attention to.
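
As a rough illustration, the two signals noticed in the spam example (heavy capitalization and money-related promises) can be turned into a hand-written rule-based classifier. Practical spam filters generally learn such patterns from large sets of labeled emails instead; the SPAM_PHRASES list and both thresholds below are assumptions chosen for this sketch.

```python
import re

# Hypothetical phrases that suggest get-rich-quick spam.
SPAM_PHRASES = ["cash flow", "private invitation", "over and over again", "gifting program"]

def spam_signals(text):
    """Return (share of fully capitalized words, number of spam phrases found)."""
    words = re.findall(r"[A-Za-z']+", text)
    # Ignore one-letter words so "A" and "I" do not inflate the ratio.
    long_words = [word for word in words if len(word) >= 2]
    shouted = [word for word in long_words if word.isupper()]
    caps_ratio = len(shouted) / len(long_words) if long_words else 0.0
    lowered = text.lower()
    phrase_hits = sum(1 for phrase in SPAM_PHRASES if phrase in lowered)
    return caps_ratio, phrase_hits

def classify(text, caps_threshold=0.3, phrase_threshold=2):
    """Label text as 'spam' if either signal reaches its (assumed) threshold."""
    caps_ratio, phrase_hits = spam_signals(text)
    if caps_ratio >= caps_threshold or phrase_hits >= phrase_threshold:
        return "spam"
    return "not spam"

print(classify("EXPERTS ARE CALLING THIS THE FASTEST WAY TO HUGE CASH FLOW EVER CONCEIVED"))  # spam
print(classify("Hi David, are we still meeting for lunch on Friday?"))  # not spam
```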

Text Generation

Text generation takes in source content, such as a book or a television or movie script, and then generates text based on the words present in that text.

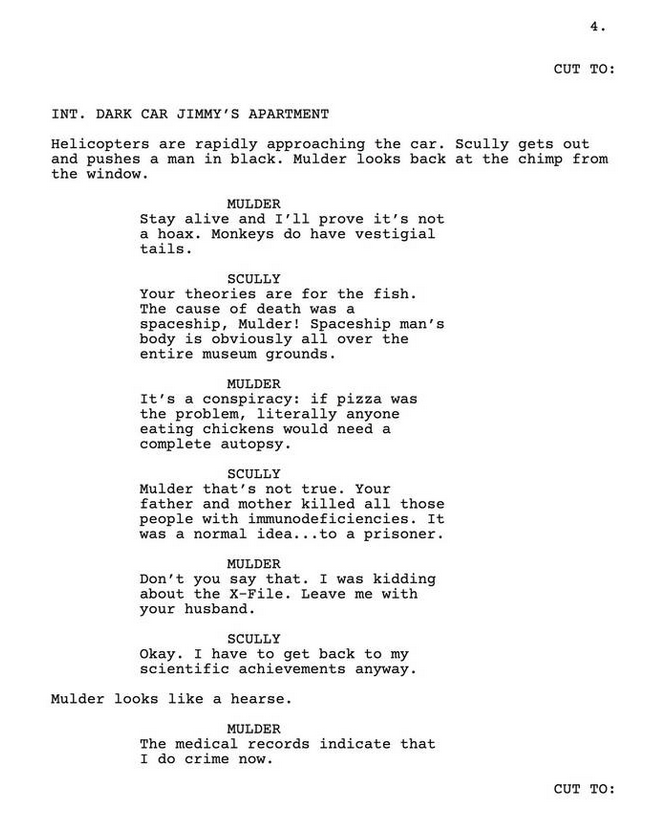

For example, a text generation algorithm generated a script for a fake episode of the TV show The X-Files by reading text from existing scripts.

While this script isn't going to win any major writing awards, the text follows a basic logic, including both character dialogue and scene descriptions.

Text generation tends to work best at smaller scales, since computers have a hard time thinking about the broader themes in writing.
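
One simple way to generate text "based on the words present" in a source is a word-level Markov chain: record which words follow each word, then walk those records at random. This is a sketch of that one technique, not necessarily how the X-Files script was produced, and the source sentence below is made up for the example.

```python
import random
from collections import defaultdict

def build_chain(text):
    """Map each word to the list of words that follow it in the source text."""
    words = text.split()
    chain = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        chain[current_word].append(next_word)
    return chain

def generate(chain, start_word, max_words=12, seed=None):
    """Walk the chain from start_word, picking a random follower at each step."""
    rng = random.Random(seed)  # a fixed seed makes the output repeatable
    output = [start_word]
    while len(output) < max_words:
        followers = chain.get(output[-1])
        if not followers:  # dead end: this word never had a successor
            break
        output.append(rng.choice(followers))
    return " ".join(output)

# Hypothetical source text for illustration.
source = "the agent opened the door and the agent saw the light behind the door"
chain = build_chain(source)
print(generate(chain, "the", seed=0))
```

Each generated word depends only on the word before it, which is consistent with the point above: the output can look locally plausible while having no sense of broader themes.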