Neuroblast Game AI

This lesson will teach you how to set up an intelligent AI system for Neuroblast that involves training the AI.

You can use the game you created in the earlier lessons, or download the Neuroblast Game Template and open that project in Spyder.

The Brain System

Before we dive into the code, we're going to set up an understanding of how we're going to have our AI brain work.

Currently, our AI waits for the cooldown on firing to finish, and then fires immediately. When we refactor our system, we'll implement this system as our default.

For our brains to make their decision whether or not to fire, they need to have some sort of input related to the world to make their decision. Our inputs will be composed of 4 parts:

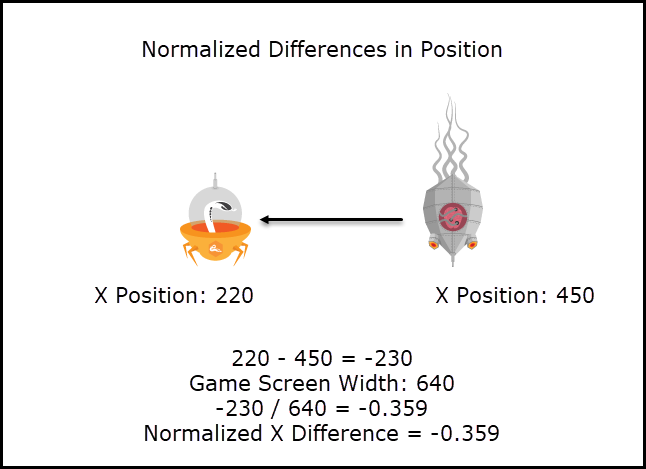

1. The normalized difference between the X value of the enemy ship's position and the X value of the player ship's position.

2. The normalized difference between the Y value of the enemy ship's position and the Y value of the player ship's position.

3. The normalized difference between the X velocity of the enemy ship and the X velocity of the player's ship.

4. The normalized difference between the Y velocity of the enemy ship and the Y velocity of the player's ship.

In our play space, we have a world of 640 pixels by 720 pixels. Here is an example of normalized values:

1. The Player's x position is 220.

2. The Enemy's x position is 450.

3. The difference of the positions (player minus enemy) is -230, which means the player is 230 pixels to the left of the enemy.

4. The width of the game space is 640, so -230/640 is -0.359.

5. -0.359 is the normalized value, because it is between -1 and 1. It indicates the player is left of the enemy (since it is negative), and is around 36% of the total length of the play space to the left of the enemy.

Having normalized values is very important to machine learning and AI. Otherwise, the machine learning algorithm will weight different types of variables differently simply because their raw values are on different scales.
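
As a minimal sketch of the arithmetic above, the calculation can be written as a small function. The helper name `normalized_difference` is hypothetical and not part of the Neuroblast code. It subtracts in the same order as the worked example (player minus enemy); the Enemy code later in this lesson subtracts enemy minus player, which flips the sign.

```python
GAME_WIDTH = 640  # play-space width in pixels, taken from the example above


def normalized_difference(player_value, enemy_value, span):
    """Return (player - enemy) scaled by the size of the play space."""
    return (player_value - enemy_value) / span


value = normalized_difference(220, 450, GAME_WIDTH)
print(round(value, 3))  # -0.359
```

Because both positions lie inside the play space, the result always falls between -1 and 1.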

The differences between position and velocity are not the only variables you could use, but they will be easy to track here, and it is easy to imagine which results would be better.

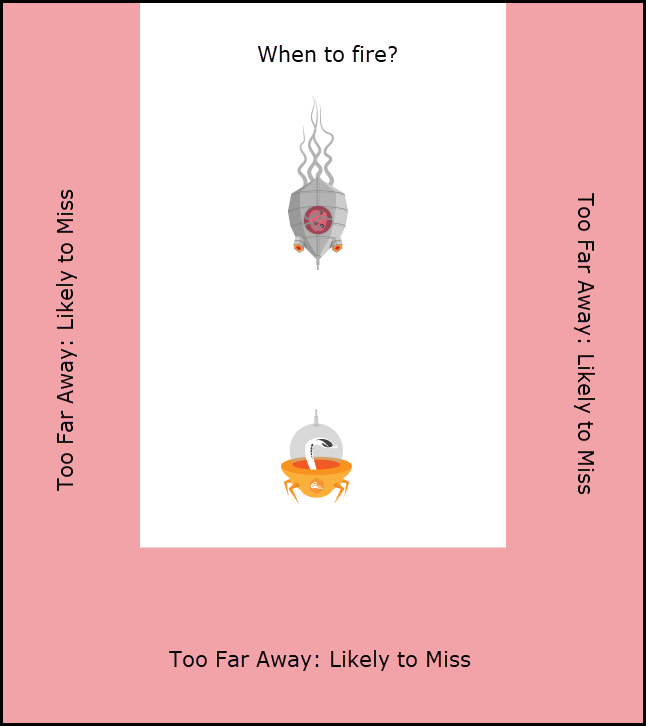

1. If the Player's ship is very close to the enemy ship, it's more likely that the enemy's shot will hit if it fires right now.

2. If the Player's ship is moving towards the enemy ship, it's more likely that the enemy's shot will hit if it fires right now.

With that out of the way, let's get started creating a very basic brain system.

Creating a Basic Brain

Our basic brain is going to be a replica of our current AI system, but will include a lot of additional setup that will be helpful when we make more advanced AI systems.

To create our basic brain system, we'll alter the following sections of our code:

1. Create a new file to hold the Brain class and set up a basic brain.

2. Update the Enemy class to use a brain to make a decision.

3. Update the Play class to set up the enemies with our basic brain.

To start, create a new file called brain.py. We will create our top level Brain class, and there will be a lot of functions not yet implemented. That's okay, we'll implement them when we make more specific brains.

from game_utils import display_text
import constants

class Brain:
    def __init__(self):
        self.trained = True
        self.brain_name = "AI: Fire Constantly"
        self.mapShots = {}
        self.mapHits = {}

    # Should I fire right now?
    def fire_decision(self, player_variables):
        return True

    # Track a fired bullet for learning
    def add_shot(self, bullet, player_variables):
        self.mapShots[bullet] = player_variables

    # Track hit bullets
    def record_hit(self, bullet):
        self.mapHits[bullet] = 1

    # Track missed bullets
    def record_miss(self, bullet):
        self.mapHits[bullet] = 0

    # Basic Brain never learns
    def train(self):
        pass

    def draw(self, screen):
        display_text(self.brain_name, 960, 60, constants.WHITE, screen)
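
To see how the bookkeeping methods fit together without running the game, here is a small sketch. `BookkeepingBrain` is a hypothetical, drawing-free copy of the methods above, and plain strings stand in for Bullet sprites.

```python
class BookkeepingBrain:
    """Hypothetical copy of the Brain bookkeeping methods, without drawing."""

    def __init__(self):
        self.mapShots = {}  # bullet -> inputs at the moment it was fired
        self.mapHits = {}   # bullet -> 1 for a hit, 0 for a miss

    def add_shot(self, bullet, player_variables):
        self.mapShots[bullet] = player_variables

    def record_hit(self, bullet):
        self.mapHits[bullet] = 1

    def record_miss(self, bullet):
        self.mapHits[bullet] = 0


brain = BookkeepingBrain()
# Strings stand in for bullets; any hashable object works as a dictionary key.
brain.add_shot("bullet_a", (0.10, -0.40, 0.0, 0.0))
brain.add_shot("bullet_b", (0.55, -0.20, 0.0, 0.0))
brain.record_hit("bullet_a")
brain.record_miss("bullet_b")
print(brain.mapHits)  # {'bullet_a': 1, 'bullet_b': 0}
```

Keeping the inputs and the outcome in two dictionaries keyed by the same bullet lets a later brain look up what the situation was when a shot that hit was fired.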

Next, we'll update the Enemy class to use a brain to make decisions. The Enemy class is in actors.py.

class Enemy(Ship):
    # Adding Brain input for ship, it needs to use a brain now
    def __init__(self, bullet_group, brain):
        #
        super(Enemy, self).__init__()
        # Store the brain for use later
        self.brain = brain
        #
        self.x = randrange(0,450)

The update method for the Enemy class is going to be more complicated, because we need to calculate our normalized values and use them for our decision.

    # Update inputs to include player position and velocity
    def update(self, screen, event_queue, delta_time, player_position, player_velocity):
        #
        if not self.alive():
            return

        self.velx = math.sin((pygame.time.get_ticks()-self.spawntime)/1800) * 40
        self.x += self.velx * delta_time
        self.y += self.vely * delta_time
        self.rect.center = (self.x, self.y)
        self.image.fill((0,0,0))
        self.idle_sequence.update(self.image,(0,0))

        # Add code to figure out the normalized differences between this enemy and the player
        player_x, player_y = player_position
        player_velx, player_vely = player_velocity
        x_difference = (self.x - player_x) / constants.game_width
        y_difference = (self.y - player_y) / constants.window_height
        x_velocity_difference = (self.velx - player_velx) / 60
        y_velocity_difference = (self.vely - player_vely) / 60
        player_variables = (x_difference, y_difference, x_velocity_difference, y_velocity_difference)
        #

        if not(self.can_fire):
            self.cooldown_timer += delta_time
            if self.cooldown_timer>self.shot_cooldown:
                self.can_fire = True
                self.cooldown_timer = 0

        # Have the brain make the decision whether or not to fire based on the player variables
        if self.can_fire:
            if (self.brain.fire_decision(player_variables)):
                Bullet(self.x,self.y+96,constants.RED,self.bullets)
                self.can_fire = False
        #
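
The input calculation in the middle of update() can be tried on its own. The sketch below is a hypothetical stand-alone helper, `build_player_variables`, and it assumes that constants.game_width is 640 and constants.window_height is 720, matching the play space described earlier.

```python
GAME_WIDTH = 640     # assumed value of constants.game_width
WINDOW_HEIGHT = 720  # assumed value of constants.window_height
VELOCITY_SCALE = 60  # the divisor update() uses for velocities


def build_player_variables(enemy_pos, enemy_vel, player_pos, player_vel):
    """Return the four normalized inputs, each computed as enemy minus player."""
    x_difference = (enemy_pos[0] - player_pos[0]) / GAME_WIDTH
    y_difference = (enemy_pos[1] - player_pos[1]) / WINDOW_HEIGHT
    x_velocity_difference = (enemy_vel[0] - player_vel[0]) / VELOCITY_SCALE
    y_velocity_difference = (enemy_vel[1] - player_vel[1]) / VELOCITY_SCALE
    return (x_difference, y_difference, x_velocity_difference, y_velocity_difference)


# Enemy near the top right, player near the bottom left and standing still.
inputs = build_player_variables((450, 100), (40, 60), (220, 600), (0, 0))
print(inputs)
```

Note the order of subtraction: with enemy minus player, a player to the left of the enemy gives a positive X difference, and a player lower on the screen gives a negative Y difference.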

Next, we'll update the Play state in the gamestates.py file to spawn enemies using our basic brain, and give the enemy's update method the information it needs to decide whether to fire or not. We'll start by importing our brain.

import pygame
from game_utils import display_text
from game_utils import load_font
import constants
from actors import Player
from actors import Enemy
import brain

Next, we'll be adding code so that the enemy can make decisions on its own. We'll start by giving the enemy a brain when it spawns in the Play class.

class Play(GameState):
    def __init__(self):
        self.userBullets = pygame.sprite.Group()
        self.player = Player(self.userBullets)
        self.userGroup = pygame.sprite.Group()
        self.userGroup.add(self.player)
        self.enemies = pygame.sprite.Group()
        self.enemyBullets = pygame.sprite.Group()
        # Create a basic brain and assign it to the enemy
        self.play_brain = brain.Brain()
        self.enemy = Enemy(self.enemyBullets, self.play_brain)
        #
        self.enemies.add(self.enemy)
        self.spawntimer = 0
        self.spawnbreak = constants.enemy_spawn_rate
        self.score = 0

Lastly, we'll pass the player information to the enemy's update method and make sure that any new enemies spawned have the brain too. We're also adding an additional draw, to call the brain's draw method on every frame.

    def update(self, screen, event_queue, delta_time, clock):
        self.player.update(screen, event_queue, delta_time)
        self.userGroup.draw(screen)

        # Get the player's information and pass it to the enemy's update method
        player_position = (self.player.x, self.player.y)
        player_velocity = (self.player.velx, self.player.vely)
        self.enemies.update(screen, event_queue, delta_time, player_position, player_velocity)
        #

        self.enemies.draw(screen)
        self.enemyBullets.update(delta_time)
        self.enemyBullets.draw(screen)
        self.userBullets.update(delta_time)
        self.userBullets.draw(screen)

        # Draw the brain on the screen
        self.play_brain.draw(screen)
        #

        enemies_hit = pygame.sprite.groupcollide(self.enemies,self.userBullets,False,True)
        for enemy, bullets in enemies_hit.items():
            enemy.TakeDamage(constants.player_bullet_damage)
            for b in bullets:
                self.score += constants.score_for_damage

        player_hit = pygame.sprite.spritecollide(self.player,self.enemyBullets, True)
        for bullet in player_hit:
            self.player.TakeDamage(constants.enemy_bullet_damage)

        self.spawntimer += delta_time
        if self.spawntimer > self.spawnbreak:
            # Spawned enemies should use the brain we set up
            self.enemies.add(Enemy(self.enemyBullets, self.play_brain))
            #
            self.spawntimer = 0

If you test the game, and you made all the updates correctly, the enemies will act the same way as before. The only difference is that the name of the AI method will appear in the right side window.

For each different brain, we're going to implement that draw method a little differently, to give the player some insight into how the enemies are making their decisions.

Creating a Static Brain

To get the hang of creating different brains, we're going to create another brain. This one will be simple, almost like our basic brain.

Our static brain will have a very simple logic system: Only fire when the player is below the enemy. It doesn't make sense for the enemy to fire if the player is above them, since it is unlikely they will hit the player.

We're going to make two updates:

1. Create a new StaticBrain class with a different way of implementing the fire_decision() method.

2. Update the Play state to use our new class.

The power of using an object-oriented approach for our game's AI is that once we have a basic AI implementation set up, creating new types of AI and adding them to our game will be fairly easy.

To start, here is the code for the new class. Add this class to the brain.py file. In the fire_decision(), we make the decision to fire if the player is below the enemy.

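class StaticBrain(Brain):
    def __init__(self):
        # Call the parent initializer so that mapShots and mapHits are created
        super(StaticBrain, self).__init__()
        self.brain_name = "AI: Fire When Player Below"

    def fire_decision(self, player_variables):
        # A negative Y difference (enemy minus player) means the player is below the enemy
        y_distance = player_variables[1]
        return y_distance < 0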
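
The sign test can look backwards at first, because pygame's Y axis grows downward: a ship lower on the screen has a larger Y value. The sketch below uses a hypothetical stand-alone function, `fire_when_player_below`, and an assumed window height of 720 to check both cases.

```python
WINDOW_HEIGHT = 720  # assumed value of constants.window_height


def fire_when_player_below(player_variables):
    """Hypothetical stand-alone version of the StaticBrain decision."""
    return player_variables[1] < 0


# Enemy at y=100 (near the top), player at y=600 (near the bottom).
player_below = (0.0, (100 - 600) / WINDOW_HEIGHT, 0.0, 0.0)
# Enemy at y=500, player at y=200: the player is above the enemy.
player_above = (0.0, (500 - 200) / WINDOW_HEIGHT, 0.0, 0.0)

print(fire_when_player_below(player_below))  # True
print(fire_when_player_below(player_above))  # False
```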

Next, update the Play state in gamestates.py to use our new brain.

class Play(GameState):
    def __init__(self):
        self.userBullets = pygame.sprite.Group()
        self.player = Player(self.userBullets)
        self.userGroup = pygame.sprite.Group()
        self.userGroup.add(self.player)
        self.enemies = pygame.sprite.Group()
        self.enemyBullets = pygame.sprite.Group()
        # Update to use our new brain
        self.play_brain = brain.StaticBrain()
        #
        self.enemy = Enemy(self.enemyBullets, self.play_brain)
        self.enemies.add(self.enemy)
        self.spawntimer = 0
        self.spawnbreak = constants.enemy_spawn_rate
        self.score = 0

Play the game, and now the AI will only fire when the player's ship is below the enemy. Now, our enemy is starting to think.

But that's not all we want to do. We also want the enemy to develop its decision-making ability by training it.

The Training Mode

We're going to set up the alternate game mode, the Training mode of our game.

To do that, we're going to make a couple of updates in different places.

1. We'll update the Menu class to start the Play state with a variable that tells it whether or not it is in training mode.

2. We'll set up the trained brain to be stored inside of game_utils.py so we can retrieve it when going to and from different game states.

3. We'll update the Play class with some alternate logic related to the training mode.

4. We'll update the Enemy class to have some different logic related to the training mode.

5. We'll update the Bullet class to record whenever a bullet misses the player.

6. Lastly, we'll update the StaticBrain class to show some information about what it is learning.

We'll start with the Menu class. In the update() method, add code to determine whether or not the game will be played in training mode.

        for event in event_queue:
            if event.type == pygame.KEYDOWN:
                if event.key == pygame.K_DOWN:
                    self.menu_selection -= 1
                    #wrap from bottom to top
                    if self.menu_selection == -1:
                        self.menu_selection = 2
                if event.key == pygame.K_UP:
                    self.menu_selection += 1
                    #wrap from top to bottom
                    if self.menu_selection == 3:
                        self.menu_selection = 0
                # New Logic: If the "Play" mode is selected, add the False argument
                if event.key == pygame.K_RETURN:
                    if self.menu_selection == 2:
                        next_state = Play(False)
                    elif self.menu_selection == 1:
                        next_state = Play(True)
                    #
                    else:
                        #Exit the game
                        next_state = None
        return next_state
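
The two wrap-around checks in the menu code can also be expressed with the modulo operator. This is only an alternative sketch, not the lesson's code; `move_selection` and `MENU_ITEMS` are hypothetical names.

```python
MENU_ITEMS = 3  # selections 0, 1 and 2, as in the Menu code above


def move_selection(selection, step):
    """Move by step (-1 for down, +1 for up) and wrap around both ends."""
    return (selection + step) % MENU_ITEMS


print(move_selection(0, -1))  # 2
print(move_selection(2, +1))  # 0
```

In Python the result of `%` takes the sign of the divisor, so -1 % 3 is 2 and no separate check for negative values is needed.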

Next, we'll add the variable to store the brain inside of game_utils.py.

import pygame
import pygame.freetype

# Add the trained_brain variable
trained_brain = None
#

# Utility functions
def load_font(size):
    global font
    font = pygame.freetype.Font('font/De Valencia (beta).otf', size)

def display_text(text, x, y, color, surface):
    global font
    text_color = pygame.Color(*color)
    text = font.render(text, text_color)
    text_position = text[0].get_rect(centerx=x, centery=y)
    surface.blit(text[0], text_position)

Next, we'll update the Play class in gamestates.py to use this variable in its initialization. First, import the game_utils module, since we're going to use our trained_brain storage here.

import pygame
from game_utils import display_text
from game_utils import load_font
import constants
from actors import Player
from actors import Enemy
import brain
import game_utils

Next, in the initialization of the Play class, we'll either use a brain we've already trained, or set up a new brain to use. We're also going to set up a cooldown timer for our training system, so we'll retrain our system every 3 seconds to see the computer learning in real time.

You can update the training timer to retrain more frequently, but the more frequently you try to retrain the AI system, the slower the game will run.

class Play(GameState):
    # The initialization will now take in the "training_mode" variable
    def __init__(self, training_mode):
        #
        self.userBullets = pygame.sprite.Group()
        self.player = Player(self.userBullets)
        self.userGroup = pygame.sprite.Group()
        self.userGroup.add(self.player)
        self.enemies = pygame.sprite.Group()
        self.enemyBullets = pygame.sprite.Group()

        # Use an existing brain, or set up a new brain
        self.training_mode = training_mode
        if game_utils.trained_brain:
            self.play_brain = game_utils.trained_brain
        else:
            self.play_brain = brain.StaticBrain()
        #

        self.enemy = Enemy(self.enemyBullets, self.play_brain)
        self.enemies.add(self.enemy)
        self.spawntimer = 0
        self.spawnbreak = constants.enemy_spawn_rate

        # Time for retraining the system
        self.training_timer = 0
        self.retrain_time = 3
        #

        self.score = 0
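
The "reuse or create" choice in the initializer can be isolated into a few lines. The sketch below is a hypothetical helper, `choose_brain`, not part of the lesson's code. It compares against None explicitly, which behaves the same as the truthiness test above for ordinary objects.

```python
def choose_brain(stored_brain, make_new_brain):
    """Reuse a stored brain if there is one, otherwise build a fresh one."""
    if stored_brain is not None:
        return stored_brain
    return make_new_brain()


# Plain objects stand in for brains here.
saved = object()
print(choose_brain(saved, object) is saved)  # True: the stored brain is reused
print(choose_brain(None, object) is saved)   # False: a new one was created
```

Passing a factory (here the `object` class) rather than an instance means a new brain is only built when it is actually needed.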

Now, in the update() method, we'll make 4 changes.

1. Let the enemy objects know whether or not we're in training mode.

2. Record all hits into our brain, and have the player not take damage in training mode.

3. Retrain the brain every 3 seconds.

4. Store our brain when we press Escape to leave the Play state.

    def update(self, screen, event_queue, delta_time, clock):
        self.player.update(screen, event_queue, delta_time)
        self.userGroup.draw(screen)
        player_position = (self.player.x, self.player.y)
        player_velocity = (self.player.velx, self.player.vely)

        # Have enemies take in whether or not the game is in training mode
        self.enemies.update(screen, event_queue, delta_time, player_position, player_velocity, self.training_mode)
        self.enemies.draw(screen)
        #

        self.enemyBullets.update(delta_time)
        self.enemyBullets.draw(screen)
        self.userBullets.update(delta_time)
        self.userBullets.draw(screen)
        self.play_brain.draw(screen)

        enemies_hit = pygame.sprite.groupcollide(self.enemies,self.userBullets,False,True)
        for enemy, bullets in enemies_hit.items():
            enemy.TakeDamage(constants.player_bullet_damage)
            for b in bullets:
                self.score += constants.score_for_damage

        # When the player is hit, update the logic to store hits in the brain
        # Also, only take damage in the play mode, not in the training mode
        player_hit = pygame.sprite.spritecollide(self.player,self.enemyBullets, True)
        for bullet in player_hit:
            self.play_brain.record_hit(bullet)
            if not self.training_mode:
                self.player.TakeDamage(constants.enemy_bullet_damage)
        #

        # Retrain the brain every 3 seconds
        self.training_timer += delta_time
        if (self.training_timer > self.retrain_time):
            self.play_brain.train()
            self.training_timer = 0
        #

        self.spawntimer += delta_time
        if self.spawntimer > self.spawnbreak:
            self.enemies.add(Enemy(self.enemyBullets, self.play_brain))
            self.spawntimer = 0

        for event in event_queue:
            if event.type == pygame.KEYDOWN:
                if event.key == pygame.K_ESCAPE:
                    # Update the escape key press to have it save the brain
                    self.play_brain.train()
                    game_utils.trained_brain = self.play_brain
                    return Menu()
                    #

        # Will display the score and health variables at the top of the screen
        display_text("Score: "+str(self.score), 200, 20, constants.WHITE, screen)
        display_text("Health: "+str(self.player.health), 350, 20, constants.WHITE, screen)
        #

        return self
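
The retraining timer follows the same accumulate-and-reset pattern as the spawn timer. As a sketch of that pattern outside the game loop, the hypothetical `RetrainTimer` class below adds up frame times and reports when the interval has passed.

```python
class RetrainTimer:
    """Hypothetical helper that mirrors training_timer and retrain_time."""

    def __init__(self, interval):
        self.interval = interval
        self.elapsed = 0

    def tick(self, delta_time):
        """Add one frame's time; return True when it is time to retrain."""
        self.elapsed += delta_time
        if self.elapsed > self.interval:
            self.elapsed = 0
            return True
        return False


timer = RetrainTimer(3)
# Simulate 14 frames of half a second each (7 seconds in total).
fired = [timer.tick(0.5) for _ in range(14)]
print(fired.count(True))  # 2
```

Because the comparison is strictly greater than, the timer fires on the first frame after 3 seconds have passed (at 3.5 seconds here), not exactly at 3 seconds.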

Next, go to the Enemy class in actors.py and we'll add information related to training mode.

For the training logic, when the enemy is in training mode, it will fire randomly at the player. That way, it can create a good sample set to use to create its logic for firing decisions.

We'll update the update() method of the class to account for this.

    # The arguments to the method have been updated to include "training_mode"
    def update(self, screen, event_queue, delta_time, player_position, player_velocity, training_mode):
        #
        if not self.alive():
            return

        self.velx = math.sin((pygame.time.get_ticks()-self.spawntime)/1800) * 40
        self.x += self.velx * delta_time
        self.y += self.vely * delta_time
        self.rect.center = (self.x, self.y)
        self.image.fill((0,0,0))
        self.idle_sequence.update(self.image,(0,0))

        player_x, player_y = player_position
        player_velx, player_vely = player_velocity
        x_difference = (self.x - player_x) / constants.game_width
        y_difference = (self.y - player_y) / constants.window_height
        x_velocity_difference = (self.velx - player_velx) / 60
        y_velocity_difference = (self.vely - player_vely) / 60
        player_variables = (x_difference, y_difference, x_velocity_difference, y_velocity_difference)

        if not(self.can_fire):
            self.cooldown_timer += delta_time
            if self.cooldown_timer>self.shot_cooldown:
                self.can_fire = True
                self.cooldown_timer = 0

        if self.can_fire:
            # If the computer is in training mode, every frame there is a 1 in 20 chance the enemy will fire
            # We're also going to pass the brain to the bullet, so that when the bullet misses it can record that miss to the brain
            if training_mode:
                if (randrange(0, 100) < 5):
                    bullet = Bullet(self.x,self.y+96,constants.RED,self.bullets, self.brain)
                    self.brain.add_shot(bullet, player_variables)
                    self.can_fire = False
            else:
                if (self.brain.fire_decision(player_variables)):
                    bullet = Bullet(self.x,self.y+96,constants.RED,self.bullets, self.brain)
                    self.brain.add_shot(bullet, player_variables)
                    self.can_fire = False
        #
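
To check that the random branch really fires about once in every 20 eligible frames, the decision can be pulled out into a small function and sampled many times. `should_fire` is a hypothetical helper, and the seeded `random.Random` instance is only there to make the run repeatable.

```python
import random


def should_fire(training_mode, brain_decision, rng):
    """Return True if an enemy that is off cooldown should fire this frame."""
    if training_mode:
        # 5 of the 100 possible values are below 5: a 1 in 20 chance
        return rng.randrange(0, 100) < 5
    return brain_decision


rng = random.Random(0)  # seeded so the experiment can be repeated
frames = 10000
shots = sum(should_fire(True, False, rng) for _ in range(frames))
print(shots / frames)  # close to 0.05
```

Outside training mode the random number is never drawn, and the function simply passes the brain's decision through.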

We're also going to update the Bullet class to use the brain to record misses.

class Bullet(pygame.sprite.Sprite):
    # On initialization, it can take in a brain variable. When the player makes bullets, it won't store them in a brain
    def __init__(self, x, y, color, container, brain = None):
        #
        pygame.sprite.Sprite.__init__(self, container)
        self.spritesheet = pygame.image.load("art/python-sprites.png")
        self.image = pygame.Surface((16,16), flags=pygame.SRCALPHA)
        self.rect = self.image.get_rect()
        basex = 423

        # Enemy bullets will be red, player bullets will be green
        if color==constants.RED:
            basex += 96
            self.speed = constants.enemy_bullet_speed
            self.direction = constants.enemy_bullet_direction
        else:
            self.speed = constants.player_bullet_speed
            self.direction = constants.player_bullet_direction

        # Generate the sprite image from spritesheet
        ssrect = pygame.Rect((basex,710,16,16))
        self.image.blit(self.spritesheet,(0,0),ssrect)
        self.rect = self.image.get_rect()
        self.rect.center = (x, y)

        #Set up the brain to be used later
        self.brain = brain
        #

    def update(self, delta_time):
        (x, y) = self.rect.center
        y += self.direction[1] * self.speed * delta_time
        x += self.direction[0] * self.speed * delta_time
        self.rect.center = (x, y)

        # If the bullet leaves the frame, destroy it so we don't have to track it anymore
        if y <= 0 or y >= constants.window_height or x <= 0 or x >= constants.game_width:
            # When you leave the visible window, and you haven't yet hit the player, record a miss in the brain
            if self.brain:
                self.brain.record_miss(self)
            #
            self.kill()
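
The boundary test that decides when a bullet counts as a miss can be checked separately from pygame. The sketch below uses a hypothetical function, `is_off_screen`, with the play-space size of 640 by 720 assumed as the defaults.

```python
def is_off_screen(x, y, width=640, height=720):
    """Return True once a point touches or passes any edge of the play space."""
    return y <= 0 or y >= height or x <= 0 or x >= width


print(is_off_screen(320, 360))  # False: middle of the play space
print(is_off_screen(320, 720))  # True: reached the bottom edge
print(is_off_screen(-5, 360))   # True: past the left edge
```

A bullet that hits the player is removed by the collision check in the Play state before it reaches an edge, so it is never also recorded as a miss.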

Finally, we're going to update our StaticBrain class to show information about what it is learning.

First, import pygame to the brain.py module, since we're going to be doing advanced drawing operations.

from game_utils import display_text
import constants
# import pygame
import pygame
#

class Brain:

Next, we'll update the draw() method of the Brain class in brain.py to refresh the screen every time it is drawn.

    def draw(self, screen):
        # This code will put a black background on the right side of the screen for us to draw over
        surf = pygame.Surface((640,720))
        surf.fill((0,0,0))
        screen.blit(surf,(640,0))
        #
        display_text(self.brain_name, 960, 60, constants.WHITE, screen)

Then, we'll create a train() method for the StaticBrain class, as well as update its draw() method to show more information.

Right now, our brain doesn't actually use this information to determine how it fires, but we'll do that in the next part of this lesson.

class StaticBrain(Brain):
    # Initialization updated to store variables about number of hits and misses
    def __init__(self):
        Brain.__init__(self)
        self.brain_name = "AI: Fire When Player Below"
        self.total_bullets = 0
        self.total_hits = 0
        self.total_misses = 0
    #

    # Our "training" will simply be counting the number of total shots, total hits, and total misses
    def train(self):
        self.total_bullets = len(self.mapShots)
        self.total_hits = sum(self.mapHits.values())
        self.total_misses = len(self.mapHits.values()) - self.total_hits
    #

    # "Drawing" the brain will just be showing counts of shots, hits, and misses
    def draw(self, screen):
        super().draw(screen)
        display_text("Total Bullets Fired: " + str(self.total_bullets), 960, 90, constants.WHITE, screen)
        display_text("Total Bullets Hit: " + str(self.total_hits), 960, 120, constants.WHITE, screen)
        display_text("Total Bullets Missed: " + str(self.total_misses), 960, 150, constants.WHITE, screen)
    #
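
The counting done in train() is easy to verify by hand. In the sketch below, made-up dictionaries stand in for mapShots and mapHits, with strings in place of bullets.

```python
# Three shots were fired; two have been resolved, one is still in flight.
map_shots = {
    "a": (0.1, -0.4, 0.0, 0.0),
    "b": (0.5, -0.2, 0.0, 0.0),
    "c": (0.3, -0.6, 0.0, 0.0),
}
map_hits = {"a": 1, "b": 0}  # 1 is a hit, 0 is a miss

total_bullets = len(map_shots)
total_hits = sum(map_hits.values())
total_misses = len(map_hits) - total_hits

print(total_bullets, total_hits, total_misses)  # 3 1 1
```

Hits and misses do not always add up to the number of bullets fired, because a bullet that is still on screen has been recorded as a shot but not yet as a hit or a miss.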

Now we can play our game and see it in action:

1. Select Train from the main menu. Enemies will fire semi-randomly, not following the fire decision logic of our brain.

2. Watch the brain information on the right side of the window update based on shots fired, hit, and missed.

3. In training mode, you won't be damaged.

4. Press Escape, and go back to the main menu

5. Select the "Play" mode. In this mode, enemies will follow the fire decision logic, but the AI information will still continue to update, starting from where the training left off.

With all this in place, we can finally create an AI that actually performs real learning based on the state of the game.

The Learning Structure

Before we dive into coding a learning brain, it is wise to try to imagine how we want the AI to learn to fire. We want to try to answer a question: How do we know what combinations of variables are likely to produce a hit?

In the Static Brain, we hardcoded the decision model that if the player was below the enemy, fire a bullet. But there's more to the problem than that.

Here's the logical structure we're going to use for our learning brain:

1. We will use 2 inputs for our learning, the X position difference and the Y position difference (to make things easier to understand for now, we'll not use the differences in velocities)

2. We'll make an assumption there is a range of position differences that are more likely to generate a hit. For example, there is some Y difference value where the player is below the enemy, and also the player is close to the enemy, that a shot is more likely to hit.

3. That means there are two values we want to track, the X difference and the Y difference, to find the optimal position to take a shot.

4. While there may be an optimal position in our calculations, we want to use a range around that optimal position, so that the enemy will take a shot if it is close to the optimal position.

5. One final note: the faster the enemy can fire, the less it needs to care about the optimal position. If the enemy cannot fire very fast, it needs to wait until it lines up a really good shot before it fires. We'll hardcode our fire rate for now, but after setting up the AI play around with different fire rates and different ranges to see if you can come up with one that works well for your design.

We expect our AI's logic to roughly look like this:

Of course, we could hardcode our optimal position calculation if we wanted to. You could manually test out different optimal position difference values until you found one that worked.

But this method has one major flaw: You don't know how your players are going to play the game. Once your player learns how the enemy makes a decision, they would be able to outsmart it every time.

By having the enemy AI train against the player, it can find the optimal position for firing for that player, and continually update that optimal position logic as the player's behavior changes.

With that knowledge, let's make our new AI!

Simple Learning Brain

To set up our learning brain, we'll do the following:

1. Add our range around the optimal position to fire as a constant in constants.py

2. Create a new LearningBrain class that will store the X and Y position differences of successful hits

3. Train the brain by calculating the average difference for all successful hits

4. Use the trained averages combined with our acceptable range to fire to make a decision on whether or not to fire.

5. Update the Play state to use our new brain.

First, we'll start by adding our range to the constants.py file.

score_for_damage = 50
starting_health = 100
player_bullet_damage = 10
enemy_bullet_damage = 5
# It can be 0.25 below or above the optimal number, in normalized units
learning_brain_acceptable_range = 0.25
#

Next, we'll create the LearningBrain class inside of the brain.py file.

This class will create lists of all the hits, and when it is trained it will find the optimal positions from those hits.

class LearningBrain(Brain):
    def __init__(self):
        Brain.__init__(self)
        self.brain_name = "AI: Find Optimal Fire Position"
        self.trained = False
        self.fire_x_optimal = 0
        self.fire_y_optimal = 0
        self.max_distance_from_optimal = constants.learning_brain_acceptable_range
        self.total_bullets = 0
        self.total_hits = 0
        self.total_misses = 0
        self.all_x_hits = []
        self.all_y_hits = []

We're going to change things around a little bit with recording the hits. To make things simpler, on every hit we'll store the X and Y position differences in our lists.

    def record_hit(self, bullet):
        Brain.record_hit(self, bullet)
        differences = self.mapShots[bullet]
        self.all_x_hits.append(differences[0])
        self.all_y_hits.append(differences[1])

Training the AI will take the average of the X position differences and Y position differences.

    def train(self):
        self.total_bullets = len(self.mapShots)
        self.total_hits = sum(self.mapHits.values())
        self.total_misses = len(self.mapHits.values()) - self.total_hits
        if (len(self.all_x_hits) > 0):
            self.fire_x_optimal = sum(self.all_x_hits) / len(self.all_x_hits)
            self.fire_y_optimal = sum(self.all_y_hits) / len(self.all_y_hits)
            self.trained = True
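
As a worked example of the averaging step, the sketch below uses three made-up hits. The lists play the role of all_x_hits and all_y_hits, and the values are illustrative only.

```python
# Position differences recorded at the moment each successful shot was fired.
all_x_hits = [0.10, 0.20, 0.30]
all_y_hits = [-0.50, -0.40, -0.30]

fire_x_optimal = 0
fire_y_optimal = 0
if len(all_x_hits) > 0:  # avoid dividing by zero before the first hit
    fire_x_optimal = sum(all_x_hits) / len(all_x_hits)
    fire_y_optimal = sum(all_y_hits) / len(all_y_hits)

print(round(fire_x_optimal, 2), round(fire_y_optimal, 2))  # 0.2 -0.4
```

Only hits feed into the average; misses affect the counters shown on screen but not the optimal position.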

Now it's time to write the fire decision code. Our fire decision will use our averages and our range to make the decision.

Our code requires the difference to be within the X range AND the Y range. You could write this code to fire when either condition is met, but that might cause the enemy to waste more shots.

    def fire_decision(self, player_variables):
        x_distance_from_average = abs(player_variables[0] - self.fire_x_optimal)
        y_distance_from_average = abs(player_variables[1] - self.fire_y_optimal)
        if (x_distance_from_average < self.max_distance_from_optimal):
            if (y_distance_from_average < self.max_distance_from_optimal):
                return True
        return False
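
The decision amounts to checking whether the current position differences fall inside a square window centered on the learned optimal position. The sketch below is a hypothetical stand-alone version, `within_window`, using the 0.25 range from constants.py and a made-up optimal position.

```python
ACCEPTABLE_RANGE = 0.25  # same value as learning_brain_acceptable_range


def within_window(player_variables, optimal, max_distance=ACCEPTABLE_RANGE):
    """Return True when both differences are close enough to the optimum."""
    x_ok = abs(player_variables[0] - optimal[0]) < max_distance
    y_ok = abs(player_variables[1] - optimal[1]) < max_distance
    return x_ok and y_ok


optimal = (0.2, -0.4)  # made-up result of an earlier training pass
print(within_window((0.30, -0.50), optimal))  # True: 0.1 away on both axes
print(within_window((0.30, -0.90), optimal))  # False: 0.5 away on the Y axis
```

Note that fire_decision() does not check the trained flag, so before the first hit is recorded the window is centered on (0, 0), the initial values of the optimal position.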

The last part of the brain updates will be updating our brain display.

We'll reuse the parts of the brain related to shot counts, but also add what our optimal firing position is.

    def draw(self, screen):
        super().draw(screen)
        display_text("Total Bullets Fired: " + str(self.total_bullets), 960, 90, constants.WHITE, screen)
        display_text("Total Bullets Hit: " + str(self.total_hits), 960, 120, constants.WHITE, screen)
        display_text("Total Bullets Missed: " + str(self.total_misses), 960, 150, constants.WHITE, screen)
        display_text("Optimal X Difference: " + str(format(self.fire_x_optimal, '.2g')), 960, 180, constants.WHITE, screen)
        display_text("Optimal Y Difference: " + str(format(self.fire_y_optimal, '.2g')), 960, 210, constants.WHITE, screen)
        display_text("Acceptable Range Constant: " + str(self.max_distance_from_optimal), 960, 240, constants.WHITE, screen)

Finally, update the Play class in gamestates.py to initialize with our new Learning Brain.

class Play(GameState):
    def __init__(self, training_mode):
        self.userBullets = pygame.sprite.Group()
        self.player = Player(self.userBullets)
        self.userGroup = pygame.sprite.Group()
        self.userGroup.add(self.player)
        self.enemies = pygame.sprite.Group()
        self.enemyBullets = pygame.sprite.Group()
        self.training_mode = training_mode
        if game_utils.trained_brain:
            self.play_brain = game_utils.trained_brain
        else:
            # Updated to use the Learning Brain
            self.play_brain = brain.LearningBrain()
            #
        self.enemy = Enemy(self.enemyBullets, self.play_brain)
        self.enemies.add(self.enemy)

Now play the game. You can start in training mode, to train the AI, before going into the main mode.

For an interesting test, spend a lot of time in training mode trying to trick the AI by getting hit when their shots are bad. Then go into play mode and play completely differently! When you start, the AI might struggle for a bit to adapt, but eventually it will start to figure out what you're doing.

There's still a lot you could do to create different kinds of learning AIs. The challenge is finding the right balance between explicitly telling the AI what to do (which players might be able to exploit) and letting the AI learn on its own (which takes time to train).

In the next lesson, we're finally tackling the really interesting AI structure - a Neural Network.